Levi Rybalov

Founder at Arkhai, researching at the intersection of distributed computing, game theory, and agentic systems.

Hi, I'm Levi. I'm the founder of Arkhai, where we build the foundation for the machine economy. My background is in game theory, distributed computing, autonomous agent systems, energy-water and energy-compute economics, and digital twins and simulations. At a high level, my work focuses on designing incentive structures in machine economies.

2024 - Present

I started a company now called Arkhai, which creates building blocks for the machine economy.

Problem

The future of commerce is agentic, but our marketplaces are built for humans. An economy where the primary buying and selling choices are made by agents requires a rethinking of how discovery, market-making, and settlement occur.

Solution

Building blocks for creating different kinds of marketplaces easily. This saves time and effort when creating marketplaces.

This is decentralized finance; it differs from traditional "DeFi" in that we do not default to using automated market makers, auctions, or solvers, and instead focus on mass-scale bilateral and multilateral negotiation for market-making. This of course does not preclude use of the former - rather, we are starting from a different perspective.

2022 - 2023

Worked on some cool crypto stuff, including protocols to improve incentives in academic environments using prediction markets, the economics of long-term data storage, and mechanism design for distributed computing. The most interesting work was in the game theory of optimistic verification at Protocol Labs.

Research

Problem: in a two-sided marketplace for compute, how can the buyer (the outsourcing party) be convinced that the seller behaved honestly and returned the correct result, when the only tool available to check the result is to redo the computation (under the assumption of reproducibility)?

The existing literature on the problem did not address real-world conditions. Almost all of it relied on analytic solutions to find the desirable equilibria, and those solutions rested on assumptions that do not hold in practice, so the mathematical guarantees they provided would break down in the real world.

I proposed a more empirical framework [14], akin to game-theoretic white-hat hacking: agents are trained using multi-agent reinforcement learning to maximize their utilities in these environments, and various anti-cheating mechanisms are then tested to see which of them are robust enough [23] [24].
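To make the underlying incentive question concrete, here is a minimal sketch of the textbook analytic baseline, not the empirical framework from [14]. It assumes a risk-neutral seller, a single job, and a buyer who recomputes the result with some fixed probability; the names `reward`, `compute_cost`, and `penalty` are hypothetical parameters chosen for illustration.

```python
def honest_utility(reward: float, compute_cost: float) -> float:
    """Seller does the work and is always paid."""
    return reward - compute_cost


def cheating_utility(reward: float, penalty: float, check_prob: float) -> float:
    """Seller skips the work: paid if unchecked, fined if the buyer recomputes."""
    return (1.0 - check_prob) * reward - check_prob * penalty


def min_check_probability(reward: float, compute_cost: float, penalty: float) -> float:
    """Smallest checking probability at which honesty is at least as good as cheating.

    Derived from reward - compute_cost >= (1 - p) * reward - p * penalty,
    which rearranges to p >= compute_cost / (reward + penalty).
    """
    if reward + penalty <= 0:
        raise ValueError("reward + penalty must be positive")
    return compute_cost / (reward + penalty)


if __name__ == "__main__":
    p = min_check_probability(reward=10.0, compute_cost=2.0, penalty=30.0)
    print(f"minimum checking probability: {p:.3f}")
```

Guarantees of this kind depend on assumptions such as a known cost, a fixed checking probability, and no collusion. Those are the sort of assumptions that tend to fail in practice, which is the motivation for testing mechanisms against trained adversarial agents instead.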

2017 - 2021

In the summer of 2017 I discovered BOINC and Gridcoin. The former is a distributed computing platform that grew out of SETI@home and connects scientists who need massive, embarrassingly parallel compute resources with volunteers who have idle computing resources. Gridcoin is a cryptocurrency that rewarded contributions to scientific computing projects like SETI@home, BOINC, and Folding@home. I became really interested in its reward structure and started doing independent research in mechanism design for distributed computing [9].

Research

The question: how to design a "get out what you put in"-style reward rule for scientific computing projects that accounts for almost any kind of computation running on almost any subset of almost any kind of computer [3] [4] [5]. This is similar to Bitcoin's reward mechanism, except that the proof-of-work puzzle is replaced with scientific computations.

I discovered a generalization of Bitcoin's proportional allocation rule [12] to highly heterogeneous hardware and software environments [6]. The reward mechanism used a compute index over the theoretical maximum output of the network of machines on every different type of task distributed on the network. The core algorithm incentivizes using hardware in the manner that maximizes useful work done relative to the capabilities of the rest of the network, and is intrinsically related to the energy consumption of the machines on the network.
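As a rough illustration of the idea, the sketch below shows the plain proportional allocation rule and one simple way to make contributions comparable across task types by dividing each machine's output by a network-wide capacity figure for that task. This is an assumption-laden toy, not the mechanism from [6]: the function names, the `network_capacity` input, and the choice to sum normalized outputs are all hypothetical.

```python
from typing import Dict


def proportional_allocation(contributions: Dict[str, float],
                            total_reward: float) -> Dict[str, float]:
    """Split total_reward in proportion to each participant's contribution."""
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("total contribution must be positive")
    return {who: total_reward * c / total for who, c in contributions.items()}


def normalized_contributions(outputs: Dict[str, Dict[str, float]],
                             network_capacity: Dict[str, float]) -> Dict[str, float]:
    """Express each machine's work as a fraction of what the network could do.

    outputs[machine][task] is work done on a task type; network_capacity[task]
    is a hypothetical estimate of the network's maximum output on that task.
    Dividing by capacity puts different task types on a common scale.
    """
    return {
        machine: sum(work / network_capacity[task] for task, work in per_task.items())
        for machine, per_task in outputs.items()
    }


if __name__ == "__main__":
    outputs = {"gpu_box": {"fp64": 40.0}, "laptop": {"int": 10.0}}
    capacity = {"fp64": 80.0, "int": 40.0}
    index = normalized_contributions(outputs, capacity)
    print(proportional_allocation(index, total_reward=30.0))
```

With a single task type and capacity held constant, the normalized version reduces to the ordinary proportional rule, which is the sense in which a capacity-based index generalizes it.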

This involved messing around with GPUs a bit: I put together two GPU servers from scratch (AMD FirePros + 1028 Series from Supermicro, to maximize double-precision FLOPS for the cost) and bought a milk crate mining rig and an SBC (Odroid). When I was selling the consumer cards, I also learned how to refurbish GPUs, which was fun. I played around a bit with CUDA/OpenCL, translating the 2D Ising model from CUDA to OpenCL [34].

The good old days

2013 - 2018

At the time, my primary motivation was environmentalism. I was in university and majored in physics, chemistry, and math, originally intending to do research in materials science for green tech. I tried out research at the intersection of computer science and environmental engineering, which is how I fell in love with algorithms.

Research

The main topic of my research was how to optimize power plant production on river networks [1] [10] under thermal pollution constraints. Power plants on river networks heat up the water they use for cooling, and that impacts downstream plants, and there are also legal and environmental limits on the temperature the river can reach.

I discovered algorithms that were optimal in the case of linearly adjustable power plant production, and came up with generalizations of the Knapsack problem for the discrete case.
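For intuition about the discrete case, here is the classic 0/1 knapsack that such generalizations start from, not the algorithms from [1] [10]. It assumes each plant is either on or off, that turning it on yields a fixed power output and a fixed integer amount of heat, and that all plants share one heat budget. This deliberately ignores river topology and heat carried downstream, which are what make the real problem harder. The function name and the `(power, heat)` encoding are hypothetical.

```python
from typing import List, Tuple


def max_power(plants: List[Tuple[int, int]], heat_budget: int) -> int:
    """Maximum total power from on/off plants under a shared heat budget.

    plants is a list of (power, heat) pairs with non-negative integer heat.
    Standard 0/1 knapsack dynamic program: best[h] is the most power
    achievable using at most h units of heat.
    """
    best = [0] * (heat_budget + 1)
    for power, heat in plants:
        # Iterate the budget downwards so each plant is switched on at most once.
        for h in range(heat_budget, heat - 1, -1):
            best[h] = max(best[h], best[h - heat] + power)
    return best[heat_budget]


if __name__ == "__main__":
    plants = [(60, 10), (100, 20), (120, 30)]  # (power, heat) per plant
    print(max_power(plants, heat_budget=50))
```

In the linearly adjustable case, output can be scaled continuously rather than switched on or off, which is why that case admits different, exactly optimal algorithms.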

Lessons

Many environmental problems indeed needed better technological solutions, but many of society's problems more generally stem from bad incentive structures.

Other

I did some research in biochemistry, designing algorithms to search for chemical compounds in large databases [11].

In my personal capacity, I became very interested in game theory and its applications in solving human coordination problems in cooperative contexts.

References

- Optimizing regional power production under thermal constraints (2016) - IEEE Xplore

- Ranking Projects + Nash Equilibrium (2017) - Steemit

- FLOP and Energy Based Model for Gridcoin (2017) - Steemit

- Why Are Incentives Important? (2017) - Steemit

- Towards Incentive-Compatible Magnitude Distribution (2017) - Steemit

- Internal Hardware Optimization, Hardware Profiling Database and Dynamic Work Unit Normalization (2017) - Steemit

- Multi-Currency Economy and Gridcoin as the World's Largest Decentralized Green Energy Powered Supercomputer (2017) - Steemit

- Expected Time to Stake in Proof-of-Stake (2018) - ResearchGate

- Blog posts on game theory and cryptoeconomics (2018-2019) - @ilikechocolate on Steemit

- Maximizing power production in path and tree riverine networks (2019) - ScienceDirect

- Metabolic Profiling of Acer truncatum (2019) - ACS Publications

- Incentivizing Energy Efficiency and Carbon Neutrality in Distributed Computing Via Cryptocurrency Mechanism Design (2022) - ResearchGate

- Whitepaper v1 (2022) - Google Docs

- An adversary-first approach to game-theoretic verifiable computing (2023) - YouTube

- Game Theory in Distributed Computing (Boston, May 2023) - YouTube

- Multi-Agent Systems and Filecoin (2024) - YouTube

- Tokenization of Latent Computing Power (ETH Global, 2024) - YouTube

- The Tokenization of Latent Computing Power (Encode, 2024) - YouTube

- Crypto x AI Mini Conference: Autonomous Agent Applications (2023) - YouTube

- NERD Labs Hacker House: Day 2 (2024) - YouTube

- DeSci London Day 2 (2023) - YouTube

- Talk at CERN (2024) - CERN Indico

- Iceland Talk (2023) - YouTube

- CoopHive Technical Documentation (2023) - CoopHive Docs

- Whitepaper v2 (2023) - Google Docs

- Original Bacalhau notes (2023) - Overleaf

- Bacalhau notes v2 (2023) - Overleaf

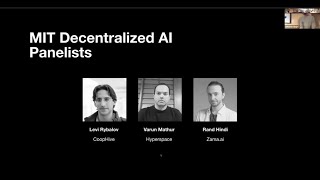

- MIT Decentralized Roundtable (2023) - Overleaf

- Distributed Computing and Public Goods (2023) - Overleaf

- Agents Unleashed Bangkok - The Future of Autonomous AI Agents (2024) - YouTube

- Agents Unleashed ETHCC - Autonomous Agent Economies (2024) - YouTube

- DeSci Sidepaper (2024) - Google Docs

- MIT Decentralized Roundtable (2024) - YouTube

- 2D Ising Model CUDA to OpenCL - GitHub